The primary aim of this report is to demonstrate and discuss the problem of multiple and severe instances of hate speech on the YouTube platform. Aspects of this analysis apply equally to other forms of social media content and to other platforms. The report both describes the problem and recommends ways to mitigate and control it.

This report demonstrates how one user, with one account, was able to upload 1710 videos in a single day. The vast majority of these videos promoted online hate. While a direct outcome of this report has been the closure by YouTube of this hate-promoting account, our primary aim in producing this report is more general in nature. Through reports such as this one, OHPI brings an empirical research approach to the study of online hate. Our research enables better policies, procedures, software and educational responses to be developed to help combat the spread of online hate and to mitigate the damage such hate causes to the fabric of our society, including to both the mental and physical health of citizens.

OHPI works with key stakeholders, including technology companies, to create systematic improvements in the way online hate is monitored and controlled. We are pleased to have worked with YouTube to resolve the particular instances of online hate documented in this report. We commend YouTube for their speedy and effective response to this report; the company took action within 24 hours of receiving a pre-release copy of this OHPI report.

The contents of the videos documented in this report make an interesting study into online hate, and particularly online antisemitism, though other forms of hate are also present. The report also highlights how the user uploaded two or more copies of each unique item of content in an effort to ensure the removal of a single copy would not make the content itself unavailable. In their response to our report, YouTube highlighted their steps to combat such avoidance efforts. Removed content is now added to a list and new uploads are automatically checked against this list.

There are questions about the effectiveness of the current implementation of the list system. The sheer volume of hate material in the account this report documents should have triggered stronger automated warnings. At least one of the videos is known to have been previously removed by YouTube, though this may have been before the current system went into operation. Automatic screening to prevent re-distribution of material the company has already evaluated as hateful is an important tool in the fight against the spread of online hate, and YouTube should be both commended for this approach and encouraged to further develop and improve the technology.
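The list mechanism described above can be illustrated with a minimal sketch. This is not YouTube's implementation, which the report does not describe; the class and method names are hypothetical, and the SHA-256 fingerprint is an assumption chosen for simplicity. An exact hash only catches byte-identical re-uploads, so a production system would presumably need a fingerprint that survives re-encoding.

```python
import hashlib


class RemovedContentList:
    """Hypothetical list of fingerprints for content already removed as hateful."""

    def __init__(self) -> None:
        self._fingerprints: set[str] = set()

    @staticmethod
    def fingerprint(data: bytes) -> str:
        # Assumption: an exact SHA-256 digest stands in for a real
        # video fingerprint, so only byte-identical copies will match.
        return hashlib.sha256(data).hexdigest()

    def record_removal(self, data: bytes) -> None:
        # Called after a reviewer has evaluated the content and removed it.
        self._fingerprints.add(self.fingerprint(data))

    def is_known_removed(self, data: bytes) -> bool:
        # Called on every new upload, before the content is published.
        return self.fingerprint(data) in self._fingerprints
```

In this sketch an upload that matches the list would be blocked or held for review, so that removing one copy of a video also covers any identical copy uploaded later.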

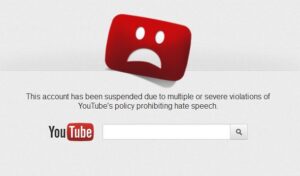

YouTube’s response in removing the account is only one part of an effective response to this incident. Equally important is the message that now appears when trying to access the account’s home page. The message reads: “This account has been suspended due to multiple or severe violations of YouTube’s policy prohibiting hate speech.” This sends a message not only to the user, but also to the public that hate speech is not acceptable on the YouTube platform. This educational response can play an important role in changing online values. The effect of this message could be strengthened if the addresses of the individual videos gave a similar specific message about hate speech, rather than a generic message noting that the video is unavailable because the user’s account was suspended.

Our report was compiled during the first week after the hate speech was uploaded. After completing the report OHPI waited to see what action would be taken by YouTube in the normal course of events. As detailed in the report, at least one video was repeatedly flagged after a link to this video was disseminated via e-mail. Additionally, within the first week, a contact at YouTube was e-mailed regarding the problem by a peak Jewish community body. Three weeks later, a month after the initial upload, no action had been taken. This suggests that the regular processes may not be sufficiently effective and there may be ways to better prioritise the reviewing of flagged videos. One simple metric would be to prioritise the review of videos where the majority of viewers have flagged the video. The number of viewers would also need to play a role. Neither of these factors ought to be determinative of whether the content is reviewed manually, but they can help prioritise those videos which users, through crowd sourcing, have identified as more likely to be problematic.
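One way to combine the two factors suggested above, the share of viewers who flagged a video and the number of viewers, is sketched below. The `FlaggedVideo` type and both function names are hypothetical, and the choice of a Wilson score lower bound is our illustrative assumption rather than anything the report or YouTube specifies. The score only orders the manual review queue; it does not decide whether a video is reviewed or removed.

```python
import math
from dataclasses import dataclass


@dataclass
class FlaggedVideo:
    video_id: str
    views: int   # number of viewers
    flags: int   # number of viewers who flagged the video


def review_priority(video: FlaggedVideo, z: float = 1.96) -> float:
    """Wilson lower bound on the proportion of viewers who flagged.

    A high flag ratio from very few viewers scores lower than the
    same ratio from many viewers, so view count plays a role too.
    """
    n = video.views
    if n <= 0:
        return 0.0
    p = min(video.flags / n, 1.0)  # guard against flags exceeding views
    centre = p + z * z / (2 * n)
    margin = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n))
    return (centre - margin) / (1 + z * z / n)


def review_queue(videos: list[FlaggedVideo]) -> list[FlaggedVideo]:
    # Highest priority first; every flagged video stays in the queue.
    return sorted(videos, key=review_priority, reverse=True)
```

Under this scoring, a video flagged by 200 of 300 viewers is reviewed before one flagged by 2 of 3 viewers, and both come before a video flagged by 10 of 1,000 viewers.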

While Non-Governmental Organisations that have expertise in identifying online hate, such as OHPI, can play a larger role in the identification and removal of online hate, the greater onus and ultimate responsibility for reviewing content must remain with the company. YouTube’s speedy response to our report highlights how the systematic involvement of key partners could be used to prioritise critical cases, in a similar way to more advanced crowd sourcing approaches. Government agencies also have a significant role to play, as detailed in the report. Ultimately, however, the input of partners needs to be an efficiency bonus, and social media companies such as YouTube need to resource their monitoring teams sufficiently to ensure all complaints can be reviewed in a timely manner. Additional responses from other stakeholders may also be needed, as detailed in the report.

The report is over 7MB and includes four pages of analysis as well as an appendix documenting details of the videos this account uploaded. Download the report on Multiple and Severe Hate Speech on YouTube.