The report “World Trends in Freedom of Expression and Media Development: Special Digital Focus 2015”, published by the United Nations Educational, Scientific and Cultural Organization (UNESCO) on 2 November 2015, discussed the work of the Online Hate Prevention Institute at some length. The following extracts are taken from the report:

Page 28:

Online hate speech renders some legal measures elaborated for other media ineffective or inappropriate, and it calls for approaches that are able to take into consideration the specific nature of the interactions enabled by digital information and communication technologies (ICTs). There is the danger of conflating a rant tweeted without thinking of the possible consequences with an actual threat that is part of a systematic campaign of hatred. There is the difference between a social media post that receives little or no attention and one that goes viral. In addition, there are the complexities that governments and courts may face, for example when trying to enforce a law against a social networking platform headquartered in a different country. Therefore, while the content of online hate speech is not intrinsically different from similar expressions found off-line, it poses peculiar challenges of its own. These challenges may be identified in terms of digital permanence, itinerancy, anonymity and cross-jurisdictional character:

- First, hate speech can stay online for a long time in different formats across multiple platforms. Andre Oboler, CEO of the Online Hate Prevention Institute, has stated: ‘The longer the content stays available, the more damage it can inflict on the victims and empower the perpetrators.’ Platforms’ architectures may allow topics to stay alive for shorter or longer periods. Twitter’s conversations organized around trending topics may facilitate the wide and quick spread of hateful messages, but they also offer the opportunity for influential speakers to shame such messages and possibly end popular threads. Facebook, by contrast, may allow multiple threads to continue in parallel and go unnoticed outside of a narrow community, creating longer-lasting spaces where certain groups are vilified.

Page 46–48:

4.3 LOBBYING PRIVATE-SECTOR COMPANIES

Various organizations that have combatted hate speech in other forms or have defended the rights of specific groups in the past have found themselves playing an increasingly important role online. This trend is especially evident in developed countries where internet penetration is high, and where private-sector companies are key intermediaries. This section examines campaigns and initiatives in the United States of America, Australia, and the United Kingdom of Great Britain and Northern Ireland, where issues of online hatred have emerged with regard to religion, race and gender. Organizations like the USA-based Anti-Defamation League (ADL) and Women, Action and the Media (WAM!); the Australian-based Online Hate Prevention Institute; the Canada-based The Sentinel Project; and the UK-based Tell MAMA (Measuring Anti-Muslim Attacks) have become increasingly invested in combating online hate speech, both by putting pressure on internet intermediaries to act more strongly against it and by raising awareness among users.

In some cases, organizations have focused on directly lobbying companies by picking up specific, ad-hoc cases and entering into negotiations. This process may involve these organizations promoting their cases through online campaigns, organized barrages of complaints, open letters, online petitions and active calls for supporters’ mobilization both online and off-line. However, it is the organizations that largely drive a specific cause. A second type of initiative promoted by some organizations is collecting complaints from users about specific types of content. This activity is particularly interesting when considered in relation to the internet intermediaries’ processes of resolving cases of hate speech.

While some companies have begun to publish transparency reports listing requests that governments make for data, information, and content to be disclosed or removed, they have not released information about requests from individual users. When individuals flag content as inappropriate, they may be notified about the processing status of their complaints, but this process remains largely hidden to other users and organizations. This limits the possibility of developing a broader understanding of what speech individuals deem to be offensive, inappropriate, insulting, or hateful. Examples of initiatives crowdsourcing requests to take action against specific types of messages include HateBase, promoted by The Sentinel Project and Mobiocracy; Tell MAMA’s Islamophobic incidents reporting platform; and the Online Hate Prevention Institute’s Fight Against Hate. These initiatives serve as innovative tools for keeping track of hate speech across social networks and of how different companies regulate it.

HateBase focuses on mapping hate speech in publicly available messages on social networking platforms in order to provide a geographical map of hateful content disseminated online. This allows for both a global overview and a more localized focus on specific language used and popular hate trends and targets. The database also includes a complementary individual reporting function, used to improve the accuracy and scope of analysis by having users verify examples of online hate speech and confirm their hateful nature in a given community. Similarly, Fight Against Hate allows users to report online hate speech on different social networks through a single platform, which helps them keep track of how many people report the hate content, where they come from, how long it has taken private companies to respond to the reports, and whether the content was effectively moderated. Finally, Tell MAMA offers a similar function of multiple-site reporting in one platform, yet focuses solely on anti-Muslim content. This reporting platform also facilitates the documentation of racially and religiously motivated incidents for later analysis. The reports received on the platform are processed by the organization, which then contacts victims and helps them deal with the process of reporting incidents to the appropriate law enforcement authorities. The information recorded is also used to detect trends in online and off-line hate speech against Muslims in the UK.

In discussing the importance of generating empirical data, the Online Hate Prevention Institute’s CEO, Andre Oboler, stated that platforms like these make requests visible to other registered users, allowing them to keep track of when the content is first reported, how many people report it, and how long it takes on average to remove it. Through these and other means, these organizations may become part of wider coalitions of actors participating in a debate on the need to balance freedom of expression with respect for human dignity and equality. This is well illustrated in the example below, in which a Facebook page expressing hatred against Aboriginal Australians was eventually taken down by Facebook, not because the page openly infringed Facebook’s terms of service, but because it was found to be insulting by a broad variety of actors, including civil society and pressure groups, regulators, and individual users.

This case illustrates how a large-scale grassroots controversy surrounding online hate speech can reach concerned organizations and government authorities, which then actively engage in the online debate and pressure private companies to resolve an issue related to online hate speech. In 2012, a Facebook page mocking indigenous Australians called ‘Aboriginal Memes’ caused a local online outcry in the form of an organized flow of content abuse reports, vast media coverage, an online social campaign and an online petition with an open letter demanding that Facebook remove the content. Memes refer in this case to a visual form for conveying short messages through a combination of pictures with inscriptions included in the body of the picture.

The vast online support in the struggle against the ‘Aboriginal Memes’ Facebook page was notable across social media and news platforms, sparking further interest among foreign news channels. In response to the media commotion, Facebook released an official statement, recognizing that some content may be ‘controversial, offensive or even illegal’. In response to Facebook’s statement, the Australian Human Rights Commissioner expressed his disapproval of the controversial page and of the fact that Facebook was operating according to the First Amendment to the U.S. Constitution on a matter that involved an Australian-based perpetrator and Australian-based victims.

The online petition was launched as a further response to Facebook’s refusal to remove the content, a refusal delivered as a standard, automatically generated reply to several content abuse reports. The open letter in the petition explained that it viewed the content as offensive due to repeated attacks against a specific group on racist grounds and demanded that Facebook take action by removing the specific pages in question and other similar pages aimed against indigenous Australians. Facebook temporarily removed the pages for content review. After talks with the Race Discrimination Commissioner and the Institute, Facebook concluded that the content did not violate its terms of service and allowed the pages to continue on condition that they include the word ‘controversial’ in their titles, to clearly indicate that the pages consisted of controversial content.

A second phase came after a Facebook user began targeting online anti-hate activists with personal hate speech attacks over the ‘Aboriginal Memes’ case. Facebook responded by tracing and banning the numerous fake accounts created by the perpetrator, yet allowed him to keep one account operational. Finally, in a third phase, Facebook blocked access to the debated page within Australia following publicly expressed concerns by both the Race Discrimination Commissioner and the Australian Communications and Media Authority. However, the banned Facebook page remains operational and accessible outside of Australia, and its hateful content continues to spread through other pages that are available in Australia. Attempts to restrict specific users from further disseminating the controversial ‘Aboriginal Memes’ resulted in a 24-hour ban on these actors from using Facebook.

Page 51–52:

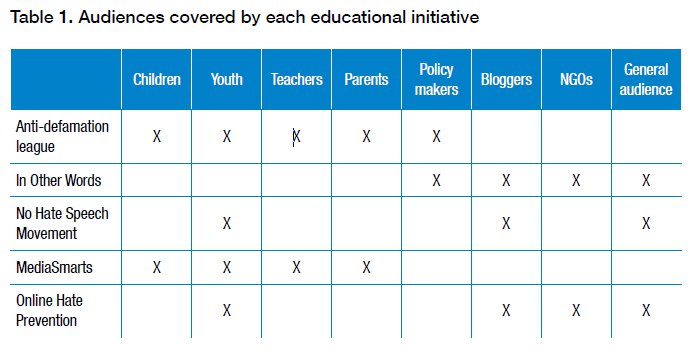

Certain knowledge and skills can be particularly important when identifying and responding to online hate speech. The present section analyses initiatives aimed at providing both information and practical tools that enable internet users to be active digital citizens. Projects and organizations covered include:

- ‘No place for hate’ by the Anti-Defamation League (ADL), USA;

- ‘In other words’ project by the Provincia di Mantova and the European Commission;

- ‘Facing online hate’ by MediaSmarts, Canada;

- ‘No hate speech movement’ by the Youth Department of the Council of Europe;

- ‘Online hate’ by the Online Hate Prevention Institute, Australia.

Even though the initiatives and organizations presented have distinctive characteristics and particular aims, they all emphasise the importance of MIL [Media and Information Literacy] and of educational strategies as effective means to counteract hate speech. They stress that an educational approach can offer a structural and sustained response to hate speech, compared with the complexities involved in decisions to ban or censor online content, or with the time and cost that legal action may take to produce tangible outcomes.

…

[Comment: OHPI also engages with policy makers, mostly through our in-depth reports, which include recommendations for policy makers, as well as through participation in international forums.]

Page 54:

The reporting platform designed by the Online Hate Prevention Institute enables individuals to report and monitor online hate speech by exposing what they perceive as hate content; tracking websites, forums and groups; and reviewing hateful materials exposed by other people.