In this briefing we discuss the use of automation to promote White Supremacy on Twitter. This case study shows that Twitter has failed to deploy even the most basic automated tools to stop its platform being abused to promote the sort of extremism that has led to multiple deadly terrorist attacks in recent years, as we documented in our report into the Halle attack and online extremism.

This briefing is part of our work for a submission to the Inquiry into Extremist Movements and Radicalism in Australia being held by the Parliamentary Joint Committee on Intelligence and Security. We published a companion article today on why more needs to be done by both Twitter and the Australian Government to stop extremism.

Automated White Supremacy

As of the start of 2021, Twitter has still not implemented automated systems to remove white supremacy. This became startlingly obvious through one Australian account the Online Hate Prevention Institute discovered. The account highlights problems not only at Twitter, but also with the web-based service platform If This Then That.

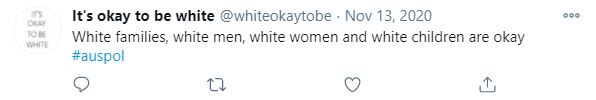

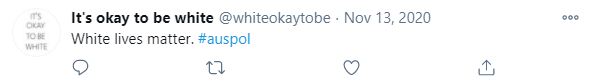

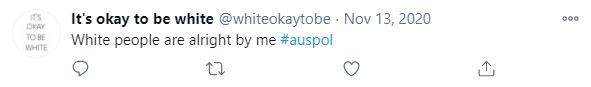

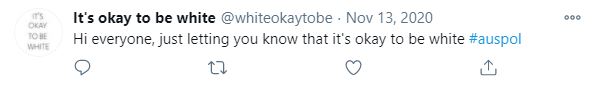

The account in question, with the name “It’s okay to be white” and the handle @whiteokaytobe, was created in March 2018 and has made 11,000 tweets. We examined the most recent 3200 tweets and found that all of them were posted using If This Then That. Across all 3200 tweets there were just 12 different messages repeated over and over. In an attempt to flood the discussion of Australian politics on Twitter with White Nationalism, every one of them used the hashtag #auspol.

Here are the tweets in order of frequency:

#1 Tweeted 340 times, “It’s okay to be white #auspol” + an image reading “It’s okay to be white”

#2 Tweeted 303 times, “God loves white people #auspol”

#3 Tweeted 303 times, “White people have interests #auspol”

#4 Tweeted 251 times, “White people are awesome! #auspol”

#5 Tweeted 251 times, “I love white people #auspol”

#6 Tweeted 251 times, “White people are our greatest strength! #auspol”

#7 Tweeted 251 times, “The right to be white must be defended #auspol”

#8 Tweeted 251 times, “White families, white men, white women and white children are okay #auspol”

#9 Tweeted 250 times, “White lives matter. #auspol”

#10 Tweeted 250 times, “White people are alright by me #auspol”

#11 Tweeted 250 times, “It’s okay to be white #auspol”

#12 Tweeted 249 times, “Hi everyone, just letting you know that it’s okay to be white #auspol”
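
The repetition in these figures is the kind of pattern a very simple automated check could surface. The following is a minimal sketch only, not a description of any tooling used by Twitter or by us. It rebuilds a stand-in sample from the counts listed above (keyed by rank rather than by message text) and measures how few distinct messages make up the 3200 tweets. The function names and the thresholds `min_total` and `max_distinct_ratio` are hypothetical and chosen purely for illustration.

```python
from collections import Counter


def repetition_summary(messages):
    """Summarise how repetitive a sequence of message keys is."""
    counts = Counter(messages)
    total = sum(counts.values())
    distinct = len(counts)
    return {
        "total": total,
        "distinct": distinct,
        # Average number of times each distinct message was posted.
        "mean_repeats": total / distinct if distinct else 0.0,
        "most_common": counts.most_common(1)[0] if counts else None,
    }


def looks_automated(summary, min_total=100, max_distinct_ratio=0.05):
    """Hypothetical heuristic: many posts drawn from very few distinct messages."""
    if summary["total"] < min_total:
        return False
    return summary["distinct"] / summary["total"] <= max_distinct_ratio


# Times each of the 12 messages was tweeted, as reported in the list above.
REPORTED_COUNTS = [340, 303, 303, 251, 251, 251, 251, 251, 250, 250, 250, 249]

# Stand-in sample: one key per tweet, named after the message's rank in the list.
sample = [
    f"message-{rank}"
    for rank, times in enumerate(REPORTED_COUNTS, start=1)
    for _ in range(times)
]

summary = repetition_summary(sample)
print(summary["total"], summary["distinct"])  # 3200 12
print(looks_automated(summary))  # True
```

On these figures each distinct message was posted about 267 times on average, so even a crude threshold like this one would flag the account.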

Where does this come from?

In the Australian Parliament in 2018 a One Nation motion promoting a White Nationalist slogan, “It’s ok to be white”, was initially supported by the Government in the Senate. The Government’s support was later described as a mistake: the Prime Minister called the move “regrettable” and the Government leader in the Senate issued an apology. This incident brought the phrase into mainstream awareness in Australia. The phrase originated in the United States, where it appeared on posters, particularly around university campuses.

Social media platforms like Facebook initially banned White Supremacy, and later extended the ban to also cover White Nationalism and White Separatism. A Civil Rights audit commissioned by Facebook found the implementation of this policy had been too narrow, allowing a range of equally harmful content to remain online. Still, Facebook’s efforts even at that stage were far better than where Twitter is today and Facebook has kept improving its automated efforts.

The different response on Twitter is unsurprising given the “love story” between White Supremacy and Twitter, which was well documented by Dr Jessie Daniels back in 2017. In her work Daniels noted how then-candidate Trump retweeted multiple tweets from white supremacist Bob Whitaker’s Twitter account @WhiteGenocideTM.

It was Whitaker who coined the term “White Genocide”, which went on to become a slogan used in multiple deadly terrorist attacks carried out by White Supremacists, including Christchurch, where the attacker’s manifesto explicitly included a heading “This is WHITE GENOCIDE”. Candidate Trump’s multiple retweets of the @WhiteGenocideTM account were a key gateway to the alt-right support he later received. Twitter was a key platform for these White Nationalists.

Twitter’s Resistance to Action

For a long time Twitter resisted increasing pressure to ban White Supremacy. One insider suggested it was out of concern that such a ban would disproportionately hit Republicans during the election campaign. Others have argued it is harder to identify White Supremacy than, for example, ISIS terrorism.

By late 2019 a petition with over 110,000 signatures was calling on Twitter to ban White Nationalism from the platform, and there were protests outside Twitter’s office. Twitter responded that it had banned 93 White Nationalism related groups from the site. Protesters were not satisfied and demanded that Twitter match Facebook’s commitment against White Nationalism.

In July 2020 Twitter banned David Duke, one of the most prominent White Nationalists, from its platform. Following a report that claimed a particular White Nationalist group was “rampant” on Twitter, the platform removed 50 additional White Supremacy accounts. This is a drop in the ocean.

If This Then That

This particular case is due to more than just Twitter. It also involves automation, in this case from If This Then That, a platform which provides powerful online automation capabilities. Platforms like this need their own policies and technical checks to ensure they aren’t being used to spread hate and extremism.

The terms of service of If This Then That (https://ifttt.com/terms) do prohibit the use of the service for hate. They state that, “You may not use the Service:… (iv) in connection with or to promote any products, services, or materials that constitute, promote or are used primarily for the purpose of dealing in:… hate materials or materials urging acts of terrorism or violence…”.

This is a good start, but without an easy way to report problem users, more is needed. Ideally a data-driven company like IFTTT would develop its own mechanisms to automatically check content to ensure the system it provides can’t be abused to cause harm.
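
One form such a check could take is a limit on how often an automation may publish the same message. The sketch below is purely illustrative and assumes nothing about how If This Then That actually works: the class `RepeatGate`, its `allow` method and the `max_repeats` threshold are all hypothetical names. It normalises each outgoing message, hashes it, and holds the message back once the same user has already published it a set number of times.

```python
import hashlib
from collections import defaultdict


class RepeatGate:
    """Hypothetical pre-publish check for an automation service."""

    def __init__(self, max_repeats=5):
        self.max_repeats = max_repeats
        # Maps (user_id, message digest) to the number of times published.
        self._published = defaultdict(int)

    def allow(self, user_id, text):
        """Return True if the message may be published, False to hold it for review."""
        # Ignore case and whitespace differences so trivial edits do not evade the check.
        normalised = " ".join(text.lower().split())
        digest = hashlib.sha256(normalised.encode("utf-8")).hexdigest()
        key = (user_id, digest)
        if self._published[key] >= self.max_repeats:
            return False
        self._published[key] += 1
        return True


gate = RepeatGate(max_repeats=2)
results = [gate.allow("user-1", "Same message #tag") for _ in range(3)]
print(results)  # [True, True, False]
```

A held message would still need human review. A check like this only narrows down what reviewers need to look at, and the right threshold would depend on the service.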

Until now the focus has been entirely on the front line platforms, those that display the content. The principles of compliance through design highlight how, in the near future, each tool in the chain will need to take appropriate steps to ensure privacy is protected, illegal content is avoided and community standards are upheld.

Twitter must do far more in 2021

Back in December 2017 we congratulated Twitter for starting to apply its policies on hate speech and extremism to usernames and handles, not just tweets. At the same time we were “concerned that rather than tackling the online hate which has the most impact on the public, the focus is instead drifting to those forms of hate which are the easiest and cheapest to find”. Unfortunately, that view was in hindsight overly optimistic.

Facebook has made great progress in the automated removal of hate speech, while Twitter has resisted improvements and lagged further and further behind.

With a market capitalisation of US$42 Billion, Twitter is not a small company, nor is it a start-up. It can and should be investing an appropriate amount in the development of automated systems to remove hate, and in sufficient expert staff, including locally in each country, to get on top of the problem of online hate and extremism.

The failure to detect even the most obvious and auto-repeated White Supremacy slogans demonstrates just how far behind community expectations Twitter is at the start of 2021.

Comments and Support

You can support our work tackling extremism through our new dedicated fundraiser:

You can also comment on this article in this Facebook discussion or by commenting on this tweet.

This briefing and the related research were prepared by Dr Andre Oboler, CEO of the Online Hate Prevention Institute.