Why would Facebook let users go through a long process to report something, only to tell them in the final step that they should have used a different process and their complaint will now not be accepted? OHPI considers this a serious design flaw which may discourage the reporting of serious complaints.

Hate speech can be posted publicly by users on their wall. When this is done, all Facebook users can see it. Facebook gives an option against each post to “remove” it, but this option is poorly named. Users don’t want to “remove it” (i.e. hide it so they personally can’t see it); they want to “report it” so Facebook will deal with it.

Here is an example of what might happen…

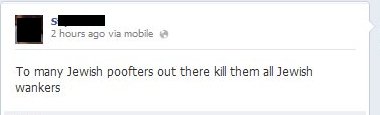

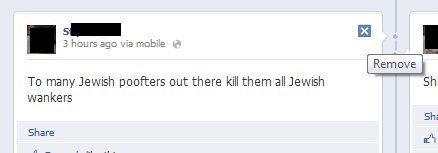

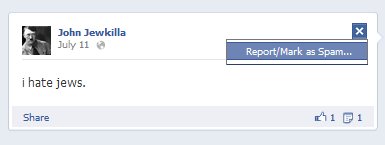

Let’s say someone posts something to their wall, which is publicly visible. For example a comment like “To many Jewish poofters out there kill them all Jewish wankers”, or “Suck him off you Jewish slut… Fuck.all jews”. A comment like the following for example:

Or

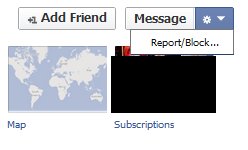

As mentioned, a user wouldn’t just want to hide this, they would want to report it. So they go to click the report option at the top of the screen.

Now you get Facebook’s “helpful” little tool to guide you through the process. First, you are asked if you simply want to block them. This stops them contacting you but does nothing to actually remove the hate. This seems to be Facebook’s preferred position: to let hate fester and tell those who have a problem with it to cover their eyes. The option to report it to Facebook is by default not selected. To proceed with a report, you need to select it. Because a report started this way will not be processed when the complaint relates to something on the timeline, this is the point at which Facebook should clarify the issue and provide a link to instructions for reporting content on a timeline. They don’t, so we proceed…

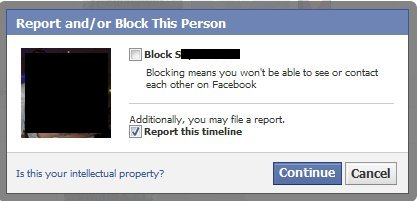

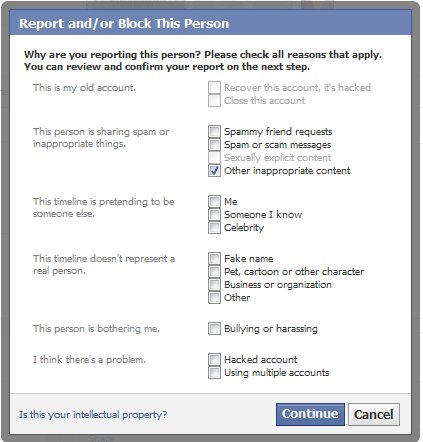

The next step asks you why you are reporting them. The majority of the focus is on privacy, identity theft and account integrity. Hate speech falls under “other inappropriate content”.

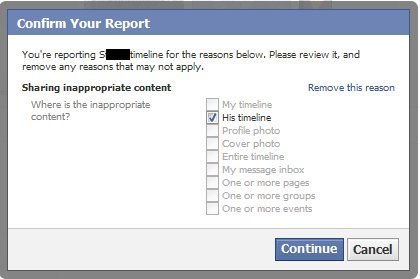

Now you are asked what it is you want to report. In this case the person posted the comments on their own timeline, but remember they have also made their timeline publicly visible, so this is not just something for them or their friends.

Having got this far, you might be surprised that the next step tells you that you have used the wrong tool and need to start over by reporting the specific comment on the timeline.

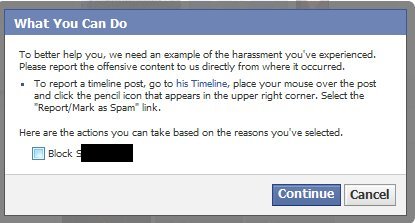

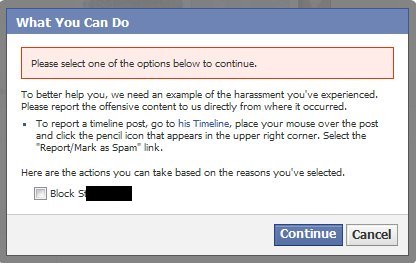

Trying to click continue doesn’t help. All this screen lets you do is block the person… which you could have done back in step 1. Remember, this is Facebook’s way of telling you to just cover your eyes. If you block the person who posted the hate speech, that is all it will do, i.e. it won’t also file a report. If you try to continue without blocking them, you get this:

OK, so let’s go back to the wall and try to report it there. Hovering where Facebook says gives the remove option.

Clicking it gives the option to “report / mark as spam”.

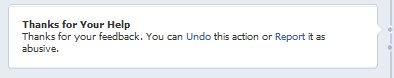

Once you do this, Facebook thanks you for your “feedback” and gives you the option to now file a report. What exactly is the feedback you just gave them if it is not a report? Do they do anything with this “feedback”? Anyway, NOW we have the option to file a report. Click report.

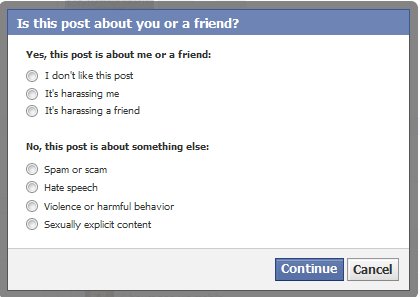

Now we enter what I will call the “standard content reporting” system. As the title points out, Facebook is now concerned about cyberbullying and privacy violations, mostly because governments have started holding Facebook accountable in this area. General hate speech is not top of the agenda, but it is an option.

The hate speech option, if selected, will change to a drop down box so that a complaint can be made in more specific terms.

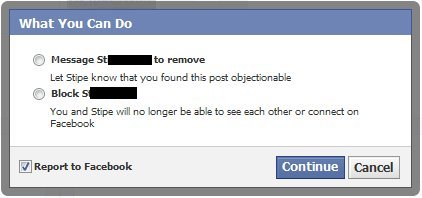

Facebook now tries to encourage us to resolve the problem ourselves. They helpfully suggest you message the person who may want to kill you and ask them to please remove their comment. I’m sure that will work, Facebook. Great idea. This option puts people at risk of further abuse, cyberbullying and hate. If you wanted to message them you could have done that without this option. The accidental use of this option poses a serious risk to people. Facebook, please remove it.

Additionally, like before, the option to report it to Facebook is by default not selected… despite this being exactly what we originally said we wanted to do. This is subtle discouragement that pushes people away from reporting complaints. It may be particularly effective at disempowering those people who are seriously upset, perhaps not at their sharpest, and most in need of help.

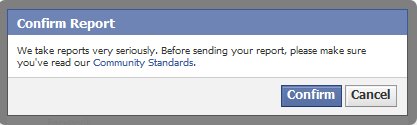

Facebook now gives explicit discouragement. Are you really sure you want to lodge your complaint? Have you read the community standards? This step is simply a waste of time.

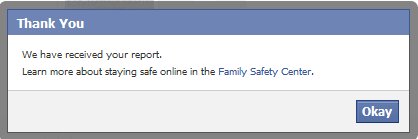

And finally we get a statement that the report has been received.

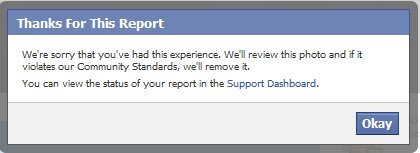

At this point we should compare the final message to the one that appears when you report an image; we wrote about that process previously.

The key differences are that the image reporting indicates Facebook will actually review the report, and there is a dashboard where you can track the progress. They also seem to take the complaint about an image more seriously: the title bar uses the word “report”, and the message acknowledges the horrible experience the user may have had. All in all, a death threat in a comment seems to be treated as a second-class complaint.

Facebook needs to make it easier to report hate speech on people’s walls and needs to change its system so the image reporting system applies across the board. It also needs to streamline the process and remove steps that needlessly aim to divert those wishing to make complaints into dead ends.