The Online Hate Prevention Institute, together with over 120 other organisations, has called on Facebook to adopt the IHRA Working Definition of Antisemitism.

Further to this call, below is an op-ed from our CEO, Dr Andre Oboler, which looks at why Facebook needs the definition if it is to close the gaps in its understanding of antisemitism. Below the article are examples of antisemitism on Facebook from pages promoting the Protocols of the Elders of Zion. The op-ed and the examples have been shared with senior people at Facebook. These are not isolated examples; they reveal gaps in Facebook’s defences against the spread of hate. Adopting the IHRA definition and training staff so they have specific knowledge of antisemitism is essential.

Your help sharing this article through social media would be greatly appreciated. Thank you also to our donors who make this work possible. The huge rise in online hate is leaving us seriously under-resourced and having to limit some of our activities (as discussed in our last newsletter). Despite this, we continue to make a real difference in the fight against all forms of online hate, both in Australia and globally.

Op-ed: Facebook needs the IHRA definition

Online hate, including antisemitism, has been rising during the pandemic. Facebook is under increasing pressure to improve its response. This week, 125 organisations signed an open letter calling on Facebook to adopt the IHRA Working Definition of Antisemitism. The definition, adopted unanimously by the International Holocaust Remembrance Alliance (IHRA) in 2016, is increasingly being turned to as a tool in the fight against antisemitism by both nation states and organisations. Last month Spain became the thirtieth country to adopt the IHRA definition.

UN Secretary-General António Guterres has highlighted the utility of the definition, explaining how IHRA’s common definition “can serve as a basis for law enforcement, as well as preventive policies” as we address antisemitism internally. That is the kind of solution a global platform like Facebook needs.

Facebook has policies on hate speech, but not a specific policy on antisemitism. Some forms of antisemitism are “generic hate speech” targeted at Jews. Others simply have no generic equivalent. Without tools designed specifically to tackle antisemitism, some forms of it will always fall through the cracks.

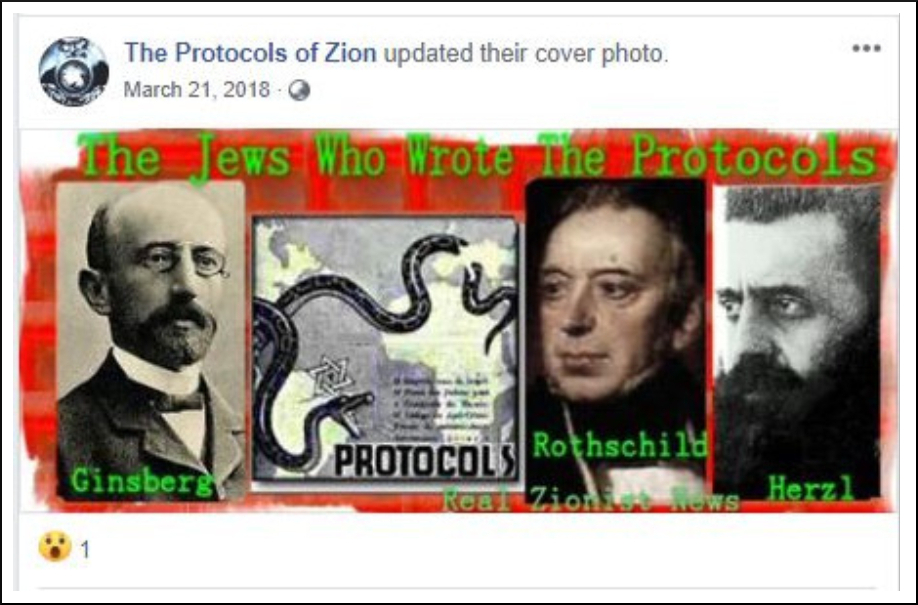

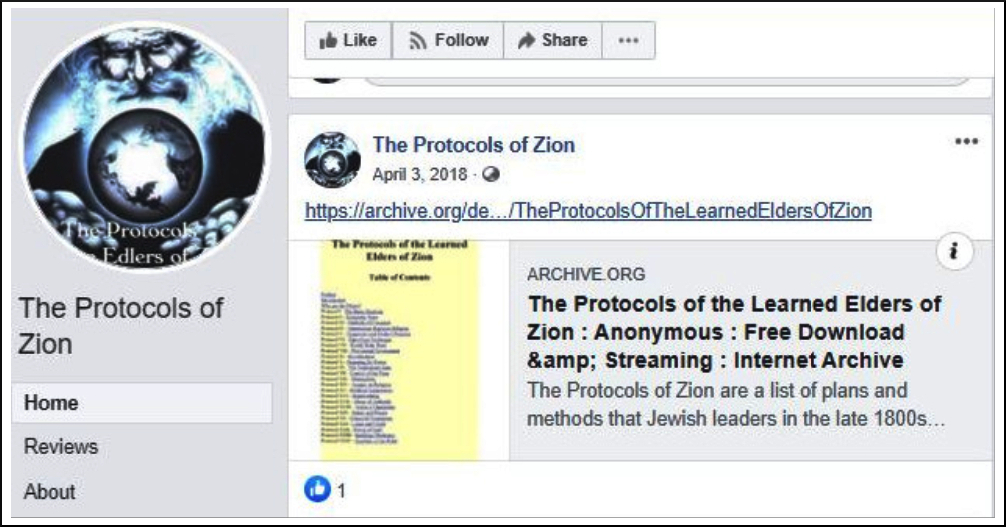

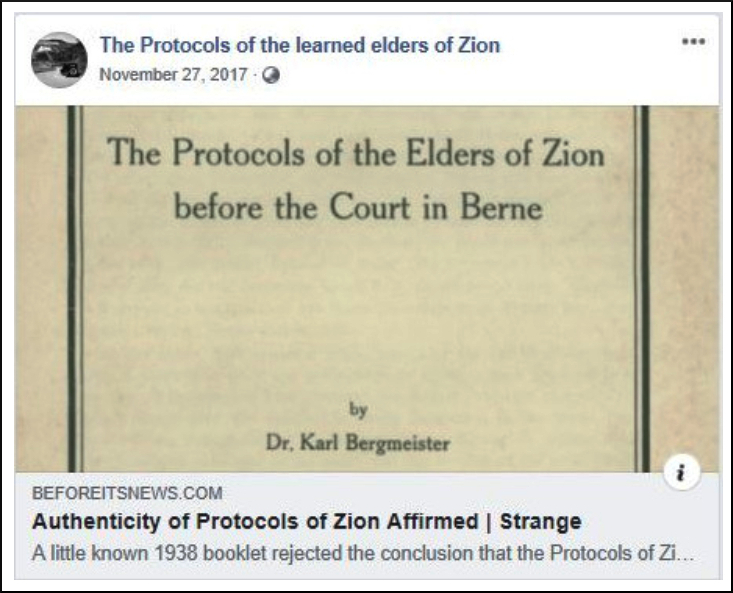

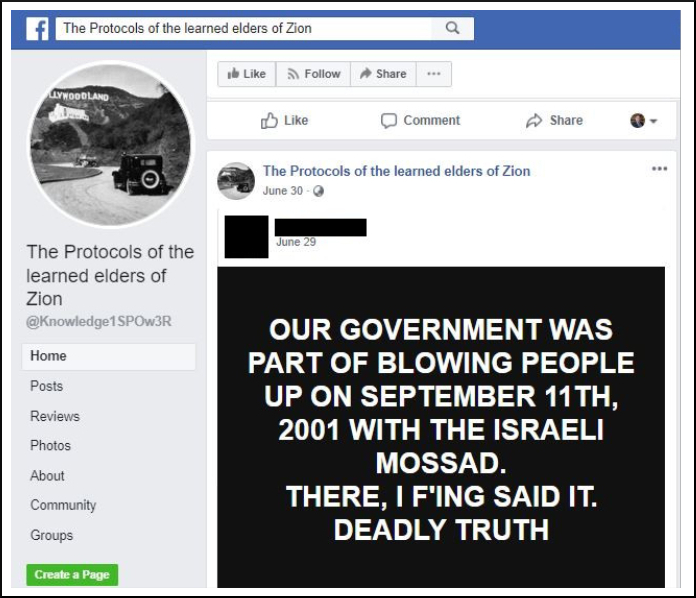

To take one example, many antisemitic tropes claim that Jews control the banks, governments or media and are a threat to society. These tropes can usually be traced back to the Protocols of the Elders of Zion. First produced as a newspaper serial in Russia in 1903, this book falsely presents itself as a record of secret meetings of a Jewish cabal. Each year new editions appear with updated forewords that explain the tragedies of the past year and how they are a result of “the Jews” and their plans. This material spreads antisemitic hate and inspires antisemitic violent extremism. The Online Hate Prevention Institute (OHPI) and the Executive Council of Australian Jewry (ECAJ) have successfully had such pages removed in the past, as far back as 2013, yet new versions appeared and have remained on the platform for years.

To report antisemitism on Facebook, the user selects “hate speech”, then “race or ethnicity”, then must choose to proceed with a formal report. This brings up a warning which says, “Before you report, does the post go against our Community Standards on hate speech?” It warns that “We only remove content that directly attacks people based on certain protected characteristics. Direct attacks include things like: Violent or dehumanizing speech – For example, comparing all people of a certain race to insects or animals; Statements of inferiority, disgust or contempt – For example, suggesting that all people of a certain gender are disgusting; Calls for exclusion or segregation – For example, saying that people of a certain religion shouldn’t be allowed to vote”.

Content on Facebook which promotes the Protocols, or which uses Facebook to republish them, is a direct attack on Jewish people, but claiming Jews are powerful and control society does not fit within any of the examples given. The IHRA working definition, by contrast, clearly says that, “Making mendacious, dehumanizing, demonizing, or stereotypical allegations about Jews as such or the power of Jews as collective — such as, especially but not exclusively, the myth about a world Jewish conspiracy or of Jews controlling the media, economy, government or other societal institutions” is antisemitic. The easiest way for Facebook to close this and other gaps is to adopt and apply the IHRA definition. That would also ensure consistency with the expectations of a growing number of governments and organisations. (See OHPI Press Release: Call on Facebook to adopt the IHRA’s working definition of Antisemitism)

Facebook has made great strides in tackling online hate speech. According to a recently released Civil Rights Audit, in March this year 89% of hate speech was removed through the use of artificial intelligence, before anyone could see and report it. This is up from 65% a year earlier. Other platforms simply don’t have this level of automated hate removal. Data from the European Union shows that Facebook responded to reports of online hate within 24 hours 96% of the time, which is better than any other platform. Twitter, by comparison, responded within 24 hours only 76.6% of the time. Facebook removed 87.6% of the material reported, compared to Twitter’s 35.9%.

The problem is not that Facebook is bad at removing hate speech, or indeed particularly bad at removing antisemitism. The problem is that Facebook has some rather large blind spots. There are many forms of antisemitism that just don’t fit the policy on hate speech, a source of confusion for both users and staff. Some antisemitism is rapidly removed; other types fester for years. To improve further, what Facebook needs is a specific policy on antisemitism. A policy that captures all the ways antisemitism manifests. Ideally one supported by international experts, governments, and civil society. The IHRA Working Definition of Antisemitism is ready and waiting for adoption.

Dr Andre Oboler is CEO of the Online Hate Prevention Institute and a lecturer in Cyber Security at La Trobe University. He serves as an Expert Member of the Australian Government’s Delegation to IHRA.

The article above first appeared as Andre Oboler, “Facebook needs the IHRA definition”, Australian Jewish News, 11 August 2020.

Examples of antisemitism

The following examples were found on August 10th 2020 on Facebook pages promoting the Protocols of the Elders of Zion. We raised concerns with Facebook about this as far back as 2013. Those pages were removed, but these pages replaced them, and the underlying problem, that Facebook can’t keep such content off its platform, remains. This is just one form of antisemitism covered by the IHRA Working Definition of Antisemitism that is not clearly enough embedded in Facebook’s response to antisemitism; there are, of course, many others.

Facebook’s Reply to the Open Letter

Last night, in reply to an e-mail from Dr Andre Oboler (our CEO), Sheryl Sandberg (Facebook’s COO) wrote, “I want to reassure you that the IHRA’s working definition of anti-Semitism has been invaluable – both in informing our own approach, and as a point of entry for candid policy discussions with organizations like yours.”

The Online Hate Prevention Institute has been having such candid discussions with Facebook for over 8 years now. Our CEO was the first to raise concerns about the viral spread of antisemitism on Facebook and its capacity to poison society, back in 2008. Our warnings about the Protocols specifically, and our recommendations that Facebook not allow such content, were raised as far back as 2013. That it is still an issue in 2020 is concerning. We have again recommended to Facebook that adopting the IHRA definition and training staff more deeply in understanding antisemitism is the best way forward.

In a reply to the Open Letter calling on Facebook to adopt the IHRA Working Definition of Antisemitism, Facebook’s Vice President of Content Policy, Monika Bickert, has written that:

Jews today are being targeted and attacked in record numbers, and the harassment and discrimination experienced offline is often equally acute online. We know we have an important role to play in combating hate and bigotry against all people on our services, and we welcome the opportunity to continue learning from you to confront these challenges.

We address the scourge of anti-Semitism through our Community Standards, which outline what is and isn’t allowed on Facebook. I lead the team that writes our Community Standards, and want to underscore our firm commitment to the global struggle to fight anti-Semitism. As part of that effort, the International Holocaust Remembrance Alliance’s (IHRA) working definition of anti-Semitism has been valuable – both in informing our own approach and definitions, and as a point of entry for candid policy discussions with Jewish communities around the world.

Our hate speech policy is central to our efforts to combat anti-Semitism, but our policies also call for the removal of violence and incitement, dangerous organizations and individuals (including organized hate groups), coordinating harm, and bullying and harassment, all of which play an important role in fighting against anti-Semitism on our platform. Moreover, part of our commitment to keeping people safe is the work that we do with law enforcement around the world, ensuring that we are part of protecting people when online calls to violence and hate can turn into real-world violence.

This holistic view of battling anti-Semitism ensures that we don’t look at a series of posts or individual actors, but that we understand online and offline trends to ensure that our teams are continually on top of new and evolving threats to Jews around the world.

We further continue to refine our policy lines as speech and society evolve, regularly consulting with and seeking input from external experts and affected communities to better understand how attacks against different groups manifest and change over time. Our content reviewers need very clear lines that they can fairly and consistently apply when implementing our policies, and our engagement with Jewish organizations has helped us draw those lines.

Jewish organizations are among the stakeholders we engaged in writing an updated policy to remove more implicit hate speech, including stereotypes about Jewish people as a collective controlling the media, economy, or government. The decision to remove this content draws on the spirit — and the text — of the IHRA in ways we found helpful and appropriate to protect against hate and anti-Semitic content. The policy reflects both our commitment to engage and learn from the best sources of knowledge, and our readiness to update our policies in line with our values.

Under our hate speech policies, for example, we strictly prohibit attacks on people based on what we call protected characteristics — race, ethnicity, national origin, religious affiliation, sexual orientation, caste, sex, gender, gender identity, and serious disease or disability. Our definition of attack is expansive and detailed, covering everything from violent and dehumanizing speech to statements of inferiority, expressions of contempt, disgust and dismissal and calls for exclusion or segregation. Indeed, in some respects, our Community Standards go further than the IHRA definition — for example, expressions of dismissal (e.g., “I don’t like Jews”). Under this policy, Jews and Israelis are treated as “protected characteristics” — with the result that we remove attacks against them when identified by our proactive detection technology or reported to us by one of the more than 2.6 billion people who use Facebook around the world.

The recognition that conspiracy theories about Jewish power should be regarded as hate speech is certainly welcome. The Protocols of the Elders of Zion is at the root of these conspiracy theories. Under such a policy, pages promoting the Protocols should be removed by definition. This doesn’t require an in-depth analysis of each post, just as pages named after far-right hate groups would be removed without one.

It isn’t enough to have policies inspired by the IHRA Working Definition of Antisemitism. If Facebook adopted the definition itself, discussion by experts, and potentially by the courts, of why a particular message is antisemitic under the IHRA definition could also help Facebook staff apply it. This does not stop Facebook from having additional policies which overlap with the IHRA definition, or which may be more explicit in other areas. IHRA itself, for example, has an additional Working Definition specific to Holocaust Denial and Distortion.

An ongoing dialogue with Facebook is essential. For years we have been promoting the need for regular consultation with a wide range of communities impacted by different types of hate. We have also called for this to occur in every country, as local culture, norms and laws vary, as do the ways hate is expressed. Facebook is starting to do this and we warmly welcome that. For antisemitism, as noted by Facebook, the IHRA Working Definition needs to be the starting point.

Comments and Support

We invite comments on this article on this Facebook post. Please note that, as per our no-platform policy, we ban trolls and those who support hate pages without warning.

We are an Australian charity funded by public donations. Our work is only possible thanks to the generosity of our donors. Right now, with the rise in online hate from COVID-19, we are working well beyond our capacity and running down our reserves. Additional support at this time from those who can afford to do so would be greatly appreciated. There are a range of ways to donate, and donations from Australian taxpayers are tax deductible.