Since posting Wednesday’s live interview on ABC News with our CEO, Dr Andre Oboler, we have had to ban two people from our Facebook page.

Our Facebook page is not a space for people to argue against our mission, or to promote their desire for a right to abuse others. It is a safe space for our supporters and for members of the public to learn more about us. Under our “No Platform Policy” we ban both hate and those who are associated with hate groups. The policy explains exactly why we do this.

The Comments We Removed

In this case, the users we banned said things that we believe deserve further attention.

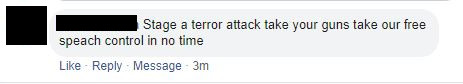

The first comment tells us to “Stage a terror attack take your guns take our free speech control in no time”. The suggestion that those working to tackle extremism would or should stage false incidents is deeply concerning. It fails to grasp just how serious extremism is. This isn’t about debating tactics or winning political arguments; it is about public safety. This comment is right out of order.

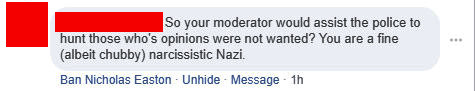

The second comment, from a different user, says “So your moderator would assist the police to hunt those who’ opinions were not wanted? You are a fine (albeit chubby) narcissistic Nazi”. What OHPI raised was the idea of new tools that could increase cooperation between those who see content inciting violence and the police, who have the responsibility to keep the public safe. This is not about opinions. Those who seek to undermine the ability of the police to receive the information they need to prevent violent incidents are supporting violent extremism and undermining public safety.

Empowering Moderators

What we actually advocated in the interview was for Facebook to give new tools to page moderators and community managers: tools that would notify them when their audience started reporting comments left by other users. Moderators could then review those comments and decide whether to remove them. They could potentially do this before Facebook itself reviewed the comments, speeding up the process.

Page moderators and community managers already review the comments made on their content, and already hide or delete content that violates their own policies. Right now, however, they do this in a way that is disconnected from the complaints system. Integrating the two would increase the chance that major breaches are resolved more quickly.

Supporting Police

We also suggested that when a moderator is reviewing content that has been reported as involving serious threats of violence, they should be presented with a button that would remove the material from the page, securely store it, and add it to a list for the relevant police to review. This could give police the upper hand in preventing some acts of violent extremism.

Who Opposes This?

The two comments we removed came from different people, but the common link was that they liked the same political lobbying group.

The group concerned has been advocating against anti-discrimination laws for some time and warning of a chilling effect on free speech. Those who have absorbed this message and see everything through an adversarial political lens need to take a step back. They need to ensure their support for freedom of speech does not become so extreme that it turns into support for violent extremism.

Groups advocating for greater freedom of speech need to make clear to their supporters what they mean by that. In particular, they need to make it clear that it does not mean no limits at all, especially when it comes to speech that incites violence.