Although racism is the most widely regulated form of hate speech, thanks to the UN’s International Convention on the Elimination of All Forms of Racial Discrimination (ICERD), online racism continues to be a growing problem on social media. This briefing gives current examples of it across X (Twitter), TikTok and Gab. We also examine how each platform’s terms of service or community standards address this form of hate.

According to ICERD, racial discrimination is:

“any distinction, exclusion, restriction or preference based on race, colour, descent, or national or ethnic origin which has the purpose or effect of nullifying or impairing the recognition, enjoyment or exercise, on an equal footing, of human rights and fundamental freedoms in the political, economic, social, cultural or any other field of public life”. – International Convention on the Elimination of All Forms of Racial Discrimination (ICERD), Article 1.1

While other types of hate speech exist, in line with ICERD when it comes to racism the categories of “race”, “colour”, “descent”, “national origin”, and “ethnic origin” are the most widely accepted. It should be noted that “race” itself is a social construct as the idea of racial science (promoted by groups such as the Nazis) has long been disproven as pseudoscience, a misuse of science to justify racial discrimination. Race in this context therefore means the group to which others perceive a person belongs.

X (formerly Twitter)

On X the rule against racism can be found in the Hateful Conduct Policy. This policy prohibits direct attacks on people based on a range of attributes including race, ethnicity, and national origin. Colour and descent are not specifically included, but would be covered more generally by the inclusion of race.

X explains that while it promotes free speech, “we prohibit behavior that targets individuals or groups with abuse based on their perceived membership in a protected category”. More specifically X prohibits:

Hateful references – targeting groups with content related to violence against that group (e.g. Holocaust content or lynchings)

Incitement – content that incites fear of a protected group (including through stereotypes), incites others to harass members of a protected group, or incites discrimination against a protected group

Slurs and Tropes – content using stereotypes that are harmful, degrading, or negative (this content may be removed or have its visibility reduced)

Dehumanization – promoting the idea that members of a protected group are less than human, or are animals or vermin

Hateful Imagery – logos, symbols, or images that promote hostility against a group

X also explicitly prohibits hateful profiles, that is, the use of prohibited content in the profile picture, banner, username or bio.

Despite these rules a significant amount of racist content can be found on X including the following:

This post promotes the false claim that certain countries are “white countries” and that others do not belong in them. The green poster promotes a negative stereotype of Black people as violent, while the comment below (the blue poster) focuses on the black man at the front of the group in the image. The image itself is racist in a broader sense showing a range of stereotypes of minority groups, all rushing into the “white countries”.

Another example uses a meme based on the main event of WWE WrestleMania 36, the Boneyard Match between The Undertaker and AJ Styles. The match went viral on social media and became a meme template. In the example (below) a promotional image of the two wrestlers is used to present “Black out on bail” as attacking and beating up “Innocent Americans”. The racism lies both in the presentation of Black people as violent and in the labelling of one figure as “Black” and the other as “American”, implying “real Americans” aren’t Black.

Gab.com

Gab has free speech as its core principle, stating in its moderation and reporting features that it “follows the law as defined by the U.S. Supreme Court and the First Amendment #1A”.

The First Amendment to the U.S. Constitution states that “Congress shall make no law… abridging the freedom of speech”, which the U.S. Supreme Court has interpreted as meaning that hate speech is constitutionally protected and that Congress (and other US lawmakers) cannot make laws that would make hate speech illegal.

As Gab will not implement any rules beyond those strictly required by US law, it allows racial slurs and other forms of hate speech. Gab has the following to say about hate speech in its Terms of Service:

Our policy on “hate speech” is to allow all speech which is permitted by the First Amendment and to disallow all speech which is not permitted by the First Amendment. We allow hate speech, as defined in New York law, if it is lawful because it is lawful. We reserve the right to remove speech when it is unlawful because it is unlawful.

We will not be tracking “hate speech,” providing any mechanism to report it specifically, nor responding to anyone who reports it, because the Constitution and Federal law protect our right to do so and forbid New York from legislating otherwise. If users wish to file a report for content which is objectionable they may do so with our moderation team via the usual channels. If they report conduct which is “hateful conduct” under New York law but which is lawful under the First Amendment they should expect that no action will be taken by us. We have the ability to respond to moderation reports but never do so.

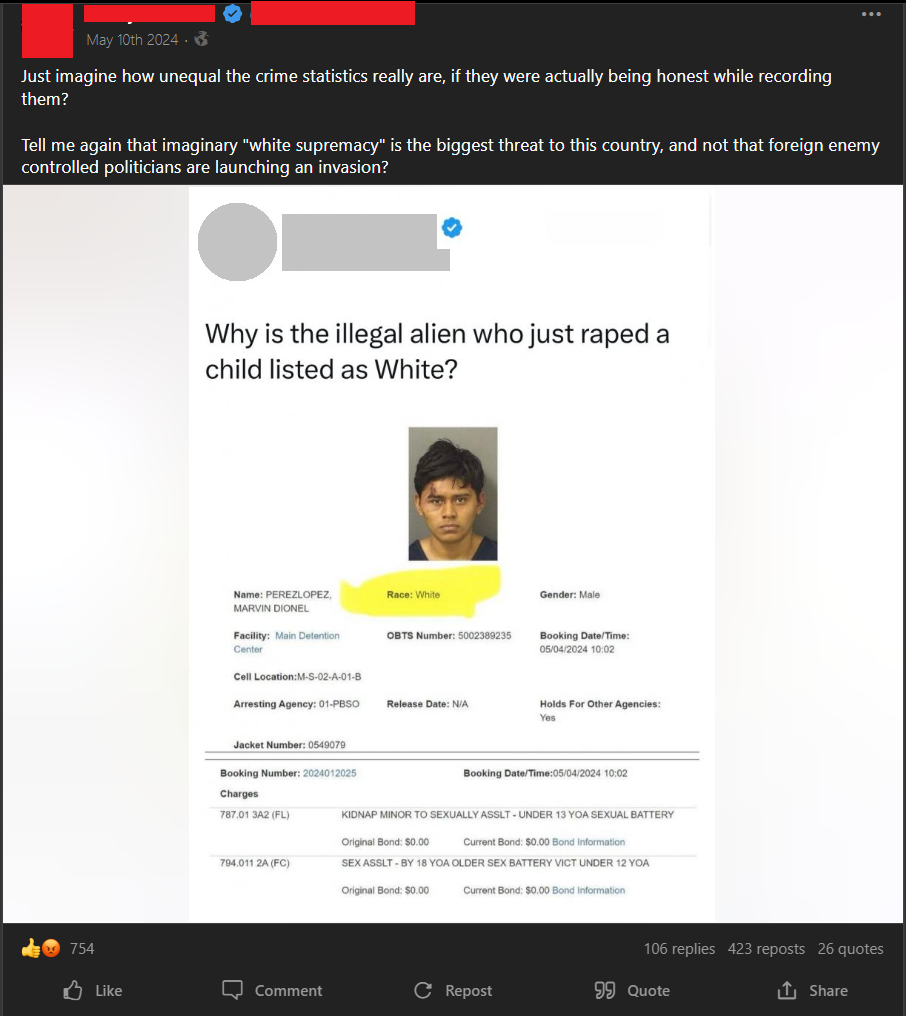

In May 2024 Marvin Perez-Lopez, an undocumented immigrant in the US from Guatemala, was arrested for allegedly kidnapping and sexually assaulting a minor. According to news reports, he has pled guilty to the offences. When the arrest report came out, it was noticed that his race had been listed as “White”. As this report spread on social media, conspiracy theories about white crime statistics being inflated emerged, along with many racist comments.

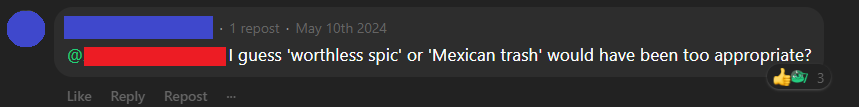

This user incorrectly identified the man as Mexican. The term “spic” is a disparaging reference to a Spanish-speaking person from Central or South America.

This commenter references a popular racist conspiracy theory that a secret global Jewish conclave controls positions of power around the world. They also mention a supposed conspiracy to tarnish the image of white people. The term “shitskins”, used to close the comment, refers to any person with dark skin, comparing the colour of their skin to the colour of faeces.

This user had a lot to say across multiple posts, dehumanising and degrading mostly black people but also other groups. Here are 4 examples of comments made on 4 different posts. There are many more.

This comment dismisses the value of I.Q. (intelligence quotient) in favour of “pattern recognition”, which technically would fall under I.Q., in an attempt to disregard high I.Q. scores by nonwhites. The commenter then asserts that if true statistics of the crimes committed by nonwhites were presented, we would all be hunting down nonwhite people, whom the commenter dehumanises as animals.

This comment is in response to an incident that occurred on 8 March 2024. A video of a brawl near a school in St. Louis, Missouri circulated on social media. A girl received a serious head injury when another girl, labelled as a bully by the news article, allegedly bashed her head repeatedly on the concrete floor. Generalising from the skin colour of the alleged perpetrator and victim, this comment calls for black people to be treated as inferior to white people and classified as subhuman, and calls those who believe in equality for all races “brainwashed”.

This comment once again refers to the conspiracy of a global Jewish conclave controlling the world. It also labels Jews as satanic, a classic form of demonisation.

This comment promotes the idea that white people are superior to all other races and that inferior races should aspire to be as good as whites.

TikTok.com

TikTok’s Community Guidelines are much broader in their scope with multiple pages and links relating to content rules. The rules regarding racism are found in the “Hate Speech and Hateful Behaviour” subsection of the Safety and Civility guidelines which prohibits hate speech, hateful behaviour, or promotion of hateful ideologies targeting protected groups. This, coupled with their inclusion principles, translates to less racism on this platform. Racism, however, does appear in video comments, particularly on news stories.

The following are comments taken from 2 news stories and 2 BLM posts.

Example 1

On November 4 2023 a fight broke out between 4 people at the Coronado Center in Albuquerque, New Mexico. Reports indicate that one person drew a pistol and ran with it through the center, discharging a single shot in the parking area. This comment on a news post portrays the BLM (Black Lives Matter) movement as an organisation covering for black criminals and trying to get them off for crimes committed.

Example 2

On April 13 2024 police responded to an incident at a mall in Bondi Junction in Sydney, where a man had stabbed multiple people. According to news reports 6 were killed and 8 others injured. One of the victims, a 9-month-old baby, was taken to hospital in critical condition. The attacker was later identified as a Queenslander from Toowoomba; he is not Jewish. A commenter on a TikTok news channel covering the story was quick to make a generalisation about Israeli Jews (who had nothing to do with this incident), saying only they would stab a baby. The comment reflects antisemitic demonisation of both Jews and Israelis.

Example 3

On a post explaining the statement “black lives matter” to mean that black lives matter as much as any other life, a commenter felt obliged to redefine the BLM acronym to reflect their personal view that black people are criminals who loot and murder and play victim to defend their crimes.

Example 4

A black poster attempting to explain the statistics on crime committed by black people in the U.S. attributed the disparity in the statistics to a number of possible causes relating to decisions made by black people themselves. For his analysis he has been widely criticised and attacked, including by this person, who labelled him a “bad Black person” for not standing in solidarity. Accusing him of thinking he was “white and Jewish” is itself a form of racism. The commenter seems to be using “white and Jewish” to mean privileged and not understanding racism. The phrase itself demonstrates a level of ignorance about both Jewish people and antisemitism in the United States.

Conclusion

There will always be those who try to express hatred online. They constantly find new ways to bypass security features, invent new derogatory terms that are not yet filtered, and sometimes move to platforms like Gab that explicitly allow hate speech. While most platforms have policies regarding hateful content, implementing those policies effectively remains a challenge. There is an arms race between those seeking to promote hate, including racism, and those seeking to create inclusive online environments without hate.

Engaging online communities in tackling hate is crucial. Finding and reporting content is the most effective way to identify the latest iterations of hatred and discrimination. AI can help find better-known expressions of hate, and can be trained on known examples, but community reporting and review by trust and safety teams are essential to remain current in an ever-changing online environment.