A few days ago, at the start of September 2024, Brazil banned X (Twitter) following Elon Musk’s refusal to suspend what Brazilian Supreme Court Justice Alexandre de Moraes described as far-right accounts spreading misinformation. Brazil’s supreme court voted to ban the platform and Moraes has given companies like Apple and Google five days to remove X from app stores and to block it from users’ devices.

These events raise questions about the extent to which X can and should moderate content on its platform. Since acquiring X in 2022, Musk has faced criticism for the platform’s alleged failure to regulate hate-speech. In this briefing we highlight how X’s community guidelines on hate-speech and its moderation systems appear to be operating under different rules. We show how attempts to report posts that spread fearful stereotypes are rarely successful, with the platform frequently failing to acknowledge that the reported post is against its rules.

In this briefing we:

- Examine X’s community guidelines and present examples in which the platform has failed to enforce them.

- Highlight the inconsistency between the platform’s policies on hate-speech and the explanation given when responding to users’ reports.

- Conclude that the platform needs to address these inconsistencies by either making its real operational policies public, or acting to operationalise the published policies to actually give them effect in its trust and safety systems.

X’s failure to enforce its policies

X’s policies about hateful conduct state that:

“We are committed to combating abuse motivated by hatred, prejudice or intolerance, particularly abuse that seeks to silence the voices of those who have been historically marginalized. For this reason, we prohibit behavior that targets individuals or groups with abuse based on their perceived membership in a protected category.”

The page recommends that users report content violating this policy and claims that the platform will “review and take action against reports of accounts targeting an individual or group of people with any of the following behavior, whether within Posts or Direct Messages”.

The list of prohibited behaviours includes:

“inciting fear or spreading fearful stereotypes… including asserting that members of a protected category are more likely to take part in dangerous or illegal activities, e.g., ‘all [religious group] are terrorists.’”

Unfortunately, the policies are not implemented effectively. The Online Hate Prevention Institute has been monitoring and campaigning against online hate, including on Twitter (now X) since 2012. In that time, we have documented thousands of items of online hate, a significant amount coming from Twitter / X. In a series of recent reports documenting antisemitism online before and after the October 7 attacks, OHPI found that antisemitism and anti-Muslim hate were both more prevalent on Twitter than on any other major social media platform.

Evidence of failure

In this section we document various posts that were reported to X by OHPI in the past few months. All of these posts spread dangerous and harmful stereotypes about protected groups. All of them violate the platform’s stated policies in its community guidelines. When X reviewed these posts, we received emails stating that none of them violate the guidelines. As you can see for yourself, these decisions were plainly and consistently wrong.

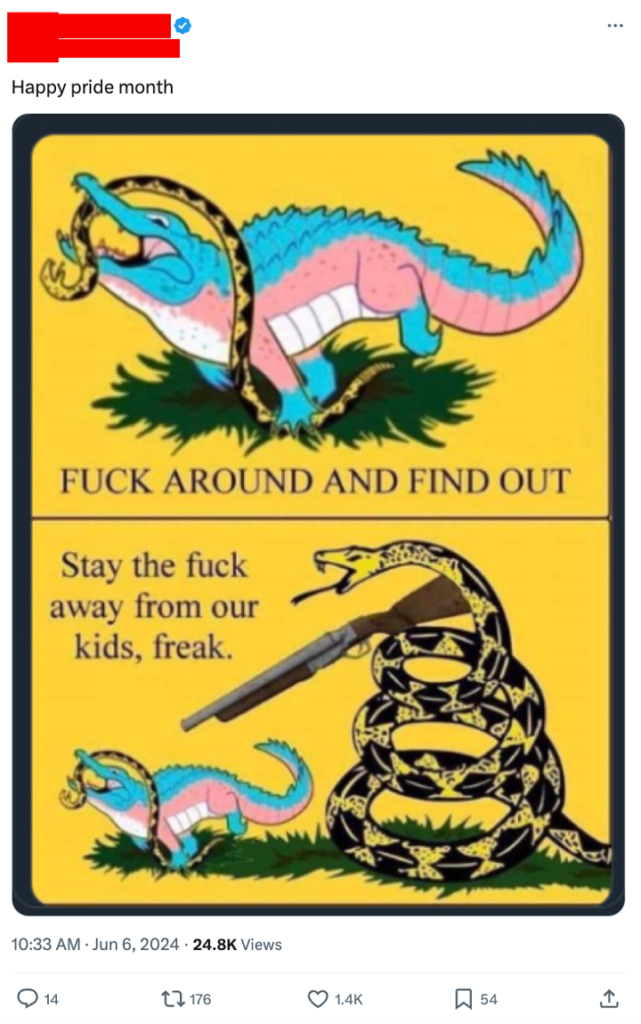

Examples of anti-LGBTQ hate

Our recent article on anti-LGBTQ hate on X during pride month showed that this hate commonly takes the form of a negative stereotype claiming all LGBTQ people are paedophiles and a danger to children. Posts which perpetuate this stereotype, like that below, fall squarely within X’s own definition of the kind of hate-speech that is prohibited on the platform. When OHPI reported these posts, none of them were found to violate X’s policies. We instead received the standardised response, shown below, claiming these posts did not breach community guidelines.

Examples of antisemitic hate

We see similar failings with antisemitic content on X. A post claiming that “The j3w controls our government” perpetuates hate-based conspiracy theories that Jewish people are secretly in control of society. These Jewish power conspiracies can be traced back to the Protocols of the Elders of Zion and Tsarist Russia. It is a hate narrative which became increasingly common on social media following the October 7 terrorist attack. When OHPI reported this to X, we received the same response saying the post did not violate community guidelines.

Another post asserts the “criminal nature of jews” (in addition to praising the Holocaust). It clearly spreads a fearful stereotype about a protected group, but the platform found that it did not violate X’s guidelines.

Examples of anti-Muslim hate

A post from Donald Trump Jr featured a cartoon image with a bomb inside a goat, with overlaid text reading “How to stop Hamas”. The post, while attacking Hamas, makes use of a more general negative portrayal of Muslims, implying they have sex with goats. This is clearly “content that intends to degrade or reinforce negative or harmful stereotypes about a protected category”. Worryingly, it received 17,000 re-tweets and 92,000 likes. When we reported this content to X, OHPI received the same email claiming that the post does not violate its safety guidelines, and that the platform would not be taking any action against it.

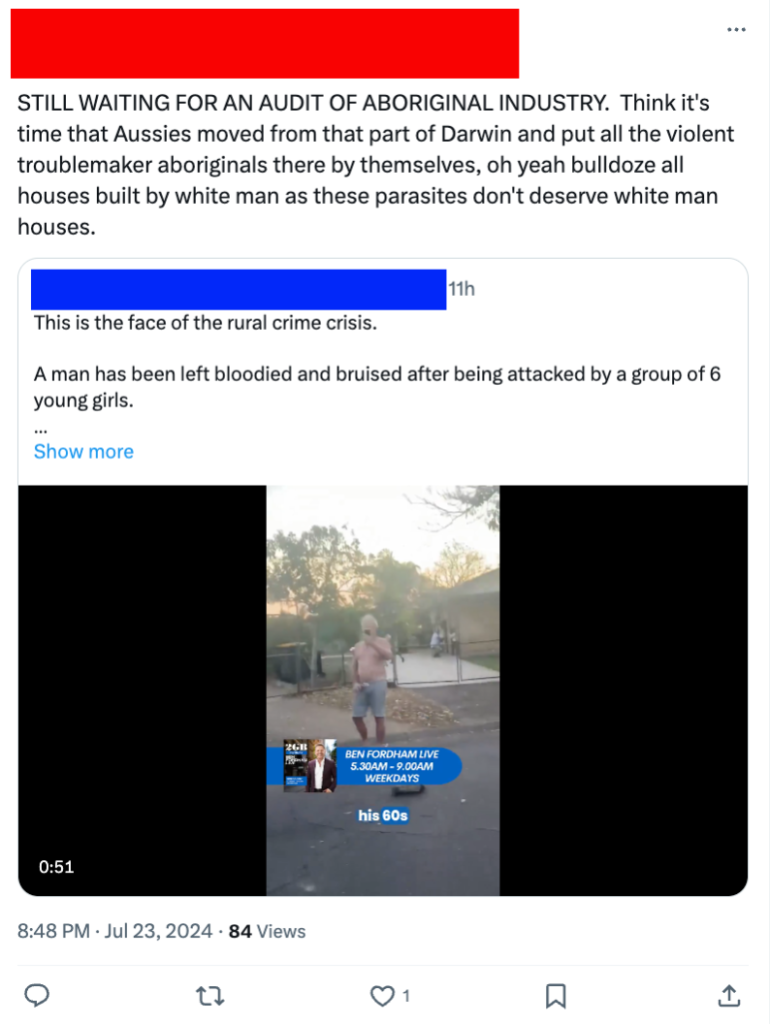

Examples of hate against First Nations people

The post below calls for an “AUDIT OF ABORIGINAL INDUSTRY” and refers to “violent troublemaker aboriginals”. This directly reinforces the fearful stereotype that Aboriginal and Torres Strait Islander people are violent and more likely to engage in criminal behaviour. It is hard to think of a clearer example of a post spreading a fearful stereotype claiming that a protected group is more likely to engage in dangerous and illegal behaviour. Once again, when this post was reviewed by X, it was found not to break its community guidelines.

These are just a handful of examples to illustrate the problem. There are plenty more available; a quick search for posts on X about trans people provides a slew of content that promotes negative and harmful stereotypes about people on the basis of their gender identity. X claims this is a violation of its policies on hateful content, but, in our experience, this content too is seldom recognised as such.

X’s reporting mechanisms

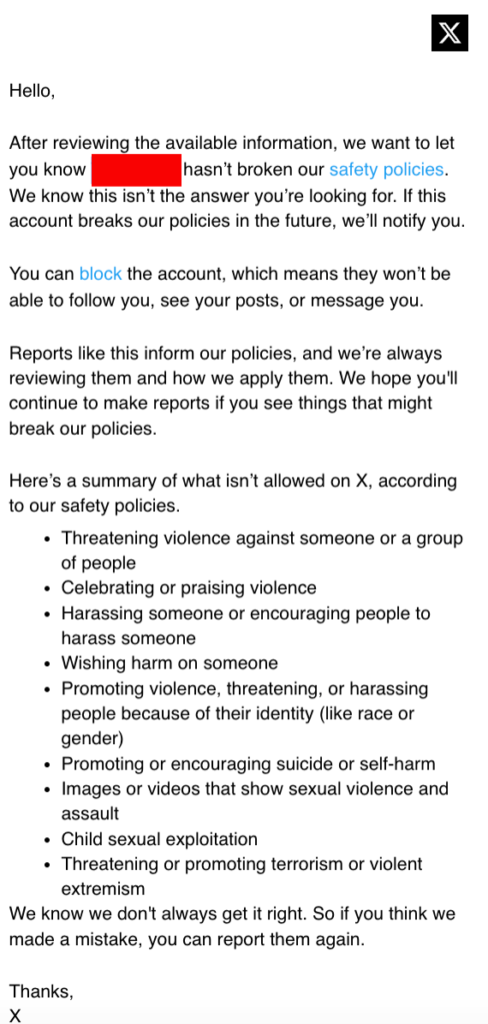

Here is the standard email sent to users who report posts for hate-speech, after the platform’s review process decides the content does not violate the platform’s guidelines:

The email gives a summary of nine behaviours that violate the platform’s safety policies including celebrating or praising violence, promoting violence or harassing people because of their identity, promoting suicide or self-harm and promoting child sexual exploitation.

Note that spreading fearful stereotypes is not included in this summary of behaviours, even though X’s community guidelines prohibit behaviour that spreads fearful stereotypes. There are two possible explanations for this discrepancy.

Possibility 1: X isn’t communicating its policies properly

Perhaps X has not updated the email to be consistent with its guidelines. This would imply that spreading harmful stereotypes about protected groups really is against X’s community guidelines, but not all of its communications reflect this.

If this is the case, then X’s failure to recognise or remove the sort of content we have just demonstrated suggests the platform is incapable of enforcing its own policies on hateful conduct. This would be a major issue for X, indicating that its mechanisms for assessing posts against its policies are faulty, and that it needs to re-think those mechanisms and its staff training to make the platform safer and compliant with its own policies.

This explanation is unlikely, as the webpage with the hateful conduct policy has existed since December 2017 and was last changed more than a year ago. It is not new and there is no excuse for overlooking it.

Possibility 2: X claims to have policies but is not implementing them

The other possibility is that, while X’s explicit community guidelines prohibit spreading harmful stereotypes, when enforcing its policies, the platform is actually working from a different rulebook. In this case the email may be more accurately summarising the real policies, which do not prohibit the spreading of harmful stereotypes against protected groups. This would explain why reported posts which feature harmful stereotypes are so often deemed not to break the rules by the platform, and why reports so rarely result in action.

If this is the problem, then X needs to amend its stated community guidelines to be consistent with its actual practices. In this case X cannot continue to claim, as it currently does, that posts that spread fearful stereotypes about protected categories will not be tolerated on the platform. X needs to be transparent about allowing such content so that users and advertisers can make an informed decision on whether they wish to continue using the platform. It may also lead to governments reconsidering whether X should be able to continue to operate, or at least continue to take payments, from within their borders.

Conclusion

X is failing to enforce its own policies around hate-speech. Its policies state that spreading fearful stereotypes against protected groups is a breach of policy and will not be tolerated. When this content is reported, the platform often claims to find no breach of policy. Its emails rejecting reports about hateful conduct don’t reference the hateful conduct policy and appear to pretend it doesn’t exist.

Either X has a long-standing policy it is deliberately no longer enforcing, or it has cut its trust and safety efforts to the point where they are no longer able to function effectively. Whatever the explanation, the consequence of this problem is that dangerous and hateful posts remain on the platform even after they have been reported by users. So long as this continues, X will fail to meet the minimum standard of safety that people in protected groups should reasonably be able to expect.